The UK is one of the most CCTV-saturated countries in the world. Being watched and monitored is an everyday reality on British streets, where the number of cameras has allegedly risen from one for every 14 people in 2008 to one for every 11 people in 2013.

In other parts of the world, the spread of CCTV cameras and the data they collect is a matter of intense public debate. Just look at Germany, where services such as Google Street View are under serious scrutiny. But in the UK, the march of electronic surveillance is greeted as the obvious solution to crime – despite plenty of evidence to the contrary.

That is in real life, on the ground. But what about online?

You’re always being watched, everywhere

Just before the summer break, the Coalition government (with the tacit support of the Labour Party) pushed the Data Retention and Investigatory Powers Act 2014 (DRIP) through Parliament in less than three days.

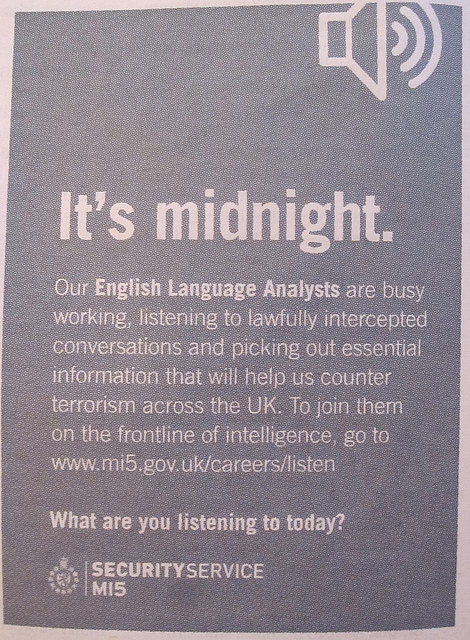

In effect, this new legislation takes the already highly questionable levels of surveillance we have become inured to in public spaces and legitimises them for the online environment. The difference is that the “data” collected are not televisual images but “communications data”. Even so, they can tell a snooper a lot about us: where we are at any point in time, who we contact and where our contacts are.

DRIP does this by legalising what critics of this bill have called “a degree of surveillance of a person of interest that totalitarian regimes, infamous for the extent and depth of their surveillance, could only have dreamt of”.

False sense of emergency

Behind this outcry are the powers granted to public authorities to access, or gain access to, our communications data at home, and to require offshore service providers to hand over this information. That alone is worrying enough for national and international watchdogs.

The outcry was also stirred by the way the Bill was rushed through Parliament just before the summer recess, on the grounds that its passage was an emergency, and then overshadowed by coverage of the cabinet reshuffle, which engulfed the day’s news cycle.

But the emergency Cameron and Clegg spoke of wasn’t a cabal of suspected terrorists, or goofy Twitter users “plotting” online and being mistaken for the real thing.

No, the “emergency” was the need to respond to a ruling by the European Court of Justice that criticised precisely the disproportionate levels of mass online surveillance that the DRIP law allows. It pointed out that such a degree of interception and snooping violates Articles 7 and 8 of the EU’s Charter of Fundamental Rights.

Of course, there is no reason to expect the British government to listen to the European Court of Justice – or, for that matter, to the international community. After all, this government has already made clear its position on European Union membership and the ECHR, continuing to flex its diplomatic muscles by insisting on doing it “our way”.

There is no more telling example than the government’s refusal to take full responsibility for the British intelligence services’ active participation in the NSA’s online surveillance programmes.

Blatant abuse

The British government is complicit in the undermining of our fundamental freedoms and human rights online. It has accordingly borne the brunt of criticism from high-level officials, such as the UN High Commissioner for Human Rights, Navi Pillay.

Undeterred, the UK media and prominent politicians (with notable exceptions) have justified the data surveillance ambitions of the British intelligence establishment, and those of the other US allies in the Five Eyes alliance, by invoking that old chestnut: national security.

DRIP, a “thoroughly confusing piece of law, highly dangerous to privacy and a blatant abuse of democratic process”, as Simon Davies, the founder of Privacy International, put it, has effectively confirmed that the PRISM affair was hardly an anomaly.

Whose security?

The use of the national security argument as an excuse for riding roughshod over fundamental freedoms enshrined in law shows that the British political establishment, which voted for this law, has lost its moral compass.

The lack of public debate in the UK also underscores that many politicians, like most of us, are simply not clued up about the traces our digital imaginations leave, or about why these traces deserve respect and due process under the law.

The passing of DRIP is a cynical misuse of democratic process that has implications for all of us in our online private lives. It is a piece of legislation that undermines bona fide efforts – from intergovernmental organisations and civil society networks – to stop the steady, and now rapid, erosion of our rights online.

Whatever the justification, in a world where more and more of what we do, how we think and interact, and where we live our lives happens online, or at the intersection of the online and offline, DRIP effectively gives the government carte blanche to access our personal communications data without due cause, due process, or adequate protection of our fundamental rights.

What concerns me right now is that, outside the Twittersphere and blogosphere, there is a lack of sustained public debate in the mainstream media about this legislation and its precursor last year, the Communications Data Bill, or “Snoopers’ Charter”.

This debate needs to be had, and in public. This is not the brave new world I want to live in. The data collection, retention, and surveillance possibilities offered by information and communication technologies should not give any state authority, or private service provider for that matter, the right to do with our data as it sees fit.

We, ordinary internet users in the UK and around the world, need to unite against this misguided piece of legislation – and the brazen misuse of the democratic process that allowed it in.
